Merriam-Webster recently named “slop” as its 2025 Word of the Year, citing the explosion of low-quality, AI-created digital content that now clogs all of our inboxes and social feeds. While employers and business leaders should see this news as a warning, this development can also be an opportunity to set yourself apart. By combining the power of AI with a healthy dose of human judgment, you can capture the authenticity that people will crave more than ever in 2026. As we ring in the new year, we’ll take a look at what slop is, talk about the problems it can cause, and provide you with some practical steps you can take to rid yourself of it.

What “AI Slop” Looks Like at Work

2025 was the year that AI slop showed up in every aspect of your job. No doubt you have started to see an increase in:

- Generic emails and corporate communications that all start to sound the same (sounding official but not saying much of anything)

- Business content that’s repetitive, vague, and quite possibly wrong

- Resumes and cover letters that sound almost too good to be true, exactly matching your job postings

- Performance self-evaluations or reviews containing flowery language and corporate jargon about “synergy” and “leveraging core competencies”

- Marketing copy that looks polished at first glance but is immediately forgettable

Why Employers Should Care

AI slop is annoying, no doubt. But it’s not just an inconvenience—it’s a real business risk. (See what we did there? Did you cringe when you read that last sentence?) Here are some reasons why you should be concerned about the proliferation of slop in your workplace.

- Brand erosion – Low-quality content lets customers, recruits, and employees know that you don’t care enough about them to put in the time to create quality work. Once that perception sets in, it’s hard to reverse.

- Productivity theater – AI slop often looks like productivity, because your employees are churning out lots of output in a short amount of time. But what they’re actually doing is creating more downstream work through the inevitable revisions, clarifications, and clean-up.

- Cultural damage – When employees are encouraged (explicitly or implicitly) to outsource their thinking to a robot, you start to see a drift away from human judgment and creativity. This is a dangerous shift for any organization.

- Legal exposure – Sloppy AI-generated policies, contracts, or employment communications can misstate legal obligations and conflict with existing policies.

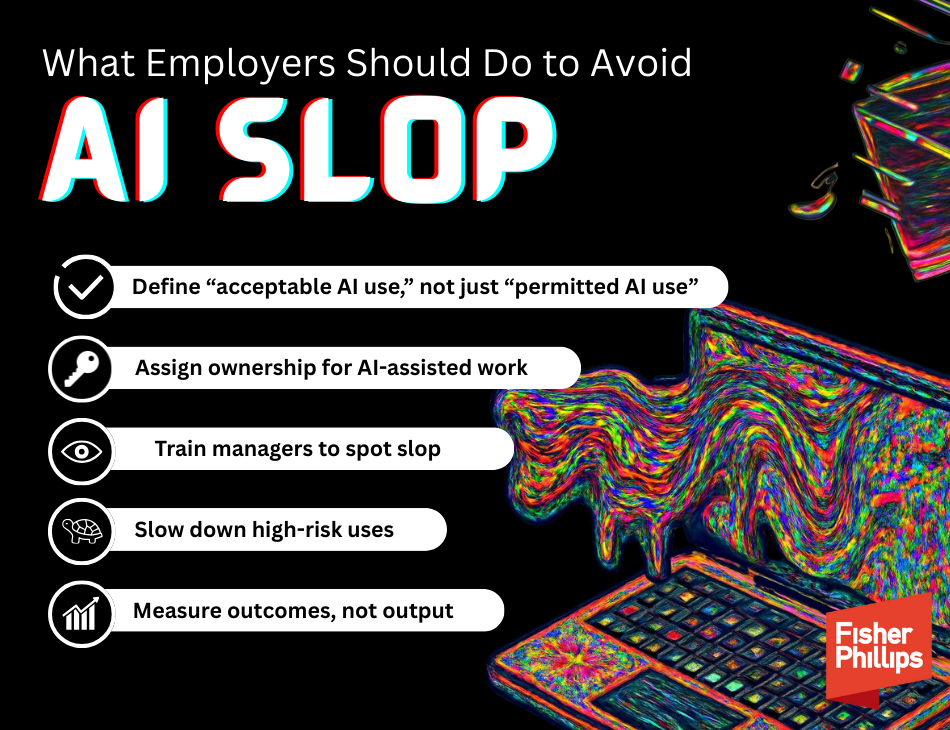

What Employers Should Do to Avoid AI Slop

The good news is that a few simple steps will help you use AI without creating slop. Here are five steps you can take to set guardrails that preserve human judgment, corporate accountability, and quality of work.

1. Define “acceptable AI use,” not just “permitted AI use”

The first generation of corporate AI policies, issued in the wake of ChatGPT’s release and the explosion of GenAI (think 2023-2024), is already outdated. If you look at your company policy, it probably focuses on “AI is allowed for X.” But that’s not enough these days. Instead, you need to go further and spell out:

- Where AI can assist and where it needs to be avoided

- Where human judgment must lead

- That AI output must always be reviewed before use

2. Assign ownership for AI-assisted work

Every AI-generated document should have a human owner responsible for accuracy, tone, and consistency with company values and policies.

3. Train managers to spot slop

Managers and content approvers don’t need to be AI experts, but they do need to recognize the telltale signs of AI slop so that it can be edited out before release. Common signs include:

- Overly generic language that could apply to any role, department, or company

- Perfectly structured paragraphs that lack a human voice, specificity, or the natural friction that comes along with human writing

- Excessive use of em-dashes like this—often multiple per paragraph—to create the illusion of nuance

- “It’s not just X—it’s Y” construction, used repeatedly to inflate ordinary points into faux insights (like “It’s not just a change—it’s a revolution”)

- False confidence in incorrect or oversimplified statements, especially about legal, technical, or operational issues

- Repetition of the same idea (especially at the conclusion) using slightly different phrasing rather than adding new substance

- Buzzword stacking (“synergy,” “alignment,” “value creation,” “impactful outcomes”) without concrete examples

- Vague conclusions that gesture toward action without assigning ownership or next steps
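For teams that want a first-pass screen before human review, a few of the surface-level signs above can be counted automatically. The Python sketch below is only an illustration: the function names, the buzzword list, and the thresholds are our own assumptions, not an established tool. It checks three of the signs (em-dash density, the “not just X, it’s Y” construction, and buzzword stacking). It cannot prove that AI wrote a document, and it is no substitute for a manager’s judgment.

```python
import re

# Hypothetical buzzword list drawn from the examples in this article; extend as needed.
BUZZWORDS = ("synergy", "alignment", "value creation", "impactful outcomes",
             "core competencies")

# Matches "It's not just X ... it's Y" within one sentence,
# accepting a straight (') or curly (\u2019) apostrophe.
NOT_JUST = re.compile(r"\bit['\u2019]s not just\b[^.!?]*?\bit['\u2019]s\b",
                      re.IGNORECASE)

EM_DASH = "\u2014"


def slop_signals(text: str) -> dict:
    """Count a few surface-level warning signs in a draft."""
    lowered = text.lower()
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    return {
        # Highest number of em-dashes found in any single paragraph.
        "max_em_dashes_per_paragraph": max(
            (p.count(EM_DASH) for p in paragraphs), default=0
        ),
        "not_just_constructions": len(NOT_JUST.findall(text)),
        "buzzword_hits": sum(lowered.count(word) for word in BUZZWORDS),
    }


def needs_closer_review(text: str, em_dash_limit: int = 2,
                        buzzword_limit: int = 3) -> bool:
    """Flag a draft for closer human review. The thresholds are assumed, not validated."""
    signals = slop_signals(text)
    return (
        signals["not_just_constructions"] >= 1
        or signals["max_em_dashes_per_paragraph"] > em_dash_limit
        or signals["buzzword_hits"] >= buzzword_limit
    )
```

A flag from a check like this should only route the draft to its human owner for a closer read. The other signs on the list, such as false confidence or vague conclusions, still require a person to catch.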

4. Slow down high-risk uses

For legal, HR, compliance, and external communications, make sure you require human review before anything is delivered. It will be almost impossible for AI to generate sound communications in these areas without human customization, often a great deal of it.

5. Measure outcomes, not output

Shift performance conversations away from questions like “How fast was this produced?” and “How many pieces of content did you develop?” Instead, ask whether the communication solved the problem and reduced the need for follow-up questions.

FP is All In on AI – But the Right Kind of AI

Don’t get us wrong. FP is a massive supporter of AI. We have an annual AI Conference bringing together thought leaders from across the country. We hold dozens of webinars each year about AI use (including our popular AI Forums). We’ve published hundreds of AI-related Insights. We have an active AI, Data, and Analytics Team. We offer products and services to help businesses master the use of AI. We understand the value it can bring businesses and corporate leaders.

But (with apologies to Spider-Man’s Uncle Ben Parker), with great power comes great responsibility. And by following the steps we have outlined above, you can responsibly use AI without contributing to the scourge of slop.

Conclusion

If you have any questions, contact your Fisher Phillips attorney, the authors of this Insight, or any attorney in our AI, Data, and Analytics Practice Group. Make sure you are subscribed to the Fisher Phillips Insight System to stay updated.