Government Puts AI Companies on Notice About Boastful Advertising: 5 Practical Lessons for the Tech Sector

9.11.25

In a wake-up call to tech companies that develop artificial intelligence products, the Federal Trade Commission (FTC) recently cracked down on a large AI software company that couldn’t back up its AI-related claims with actual evidence of success. The August 28 final order is a good reminder that long-standing advertising principles apply equally to traditional businesses and to those marketing AI products and services. What happened in this case, and what are five practical lessons tech companies can take from the government action?

Software Company Makes Bold Claims About AI Products – and Government Takes Notice

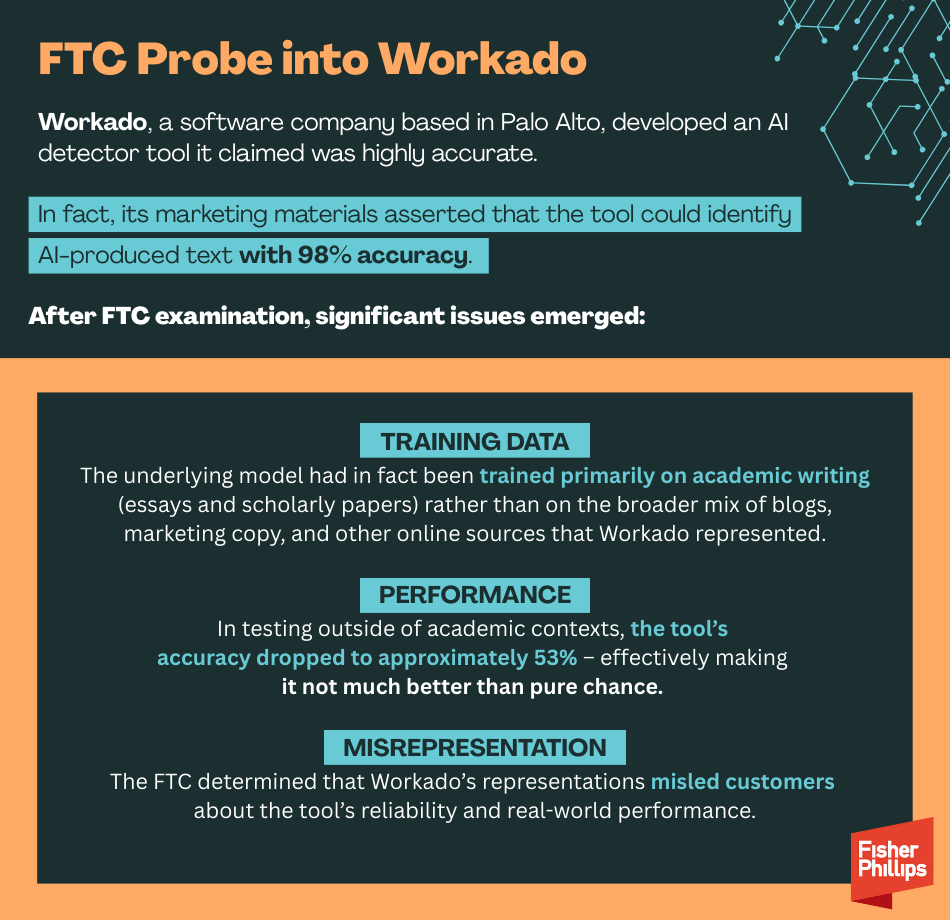

Workado, a software company based in Palo Alto, developed an AI detector tool it claimed was highly accurate. In fact, its marketing materials asserted that the tool could identify AI-produced text with 98% accuracy. This claim is particularly striking given that educators, publishers, and businesses are actively seeking reliable methods to distinguish human-authored content from text created by generative AI.

When the FTC examined Workado’s claims more closely, however, significant issues emerged:

- Training Data: Although Workado advertised its tool as capable of analyzing a wide range of content, the underlying model had in fact been trained primarily on academic writing, such as essays and scholarly papers, rather than on the broader mix of blogs, marketing copy, and other online sources that the company represented.

- Performance: In testing outside of academic contexts, the tool’s accuracy dropped to approximately 53%, barely above the 50% that random guessing would achieve on a two-way choice between AI-written and human-written text. The FTC called it “no better than a coin toss.”

- Misrepresentation: The FTC determined that Workado’s marketing materially overstated the product’s capabilities, and that these representations misled customers about the tool’s reliability and real-world performance.

FTC Comes Down Hard on Tech Company

As a result, the FTC approved a final consent order on August 28 that requires Workado to:

1. Stop making unsupported accuracy claims. Workado must stop making any representations about the effectiveness or “accuracy” of its AI Content Detector unless those claims are not misleading and are supported by “competent and reliable evidence” at the time the statements are made.

2. Retain test data and evidence. The company must maintain documentation of how it establishes its performance claims, including the testing data and analysis related to the product’s efficacy.

3. Notify customers. The company must send users an FTC-drafted notice that explains the issue and informs them of the consent order and settlement, so that they are aware of the corrected representations about the tool.

4. Report to the FTC. Workado must provide annual compliance reports to the government for four years.

5 Practical Lessons for AI Companies

Given this FTC order, here are some practical takeaways that can guide your approach to marketing your AI products:

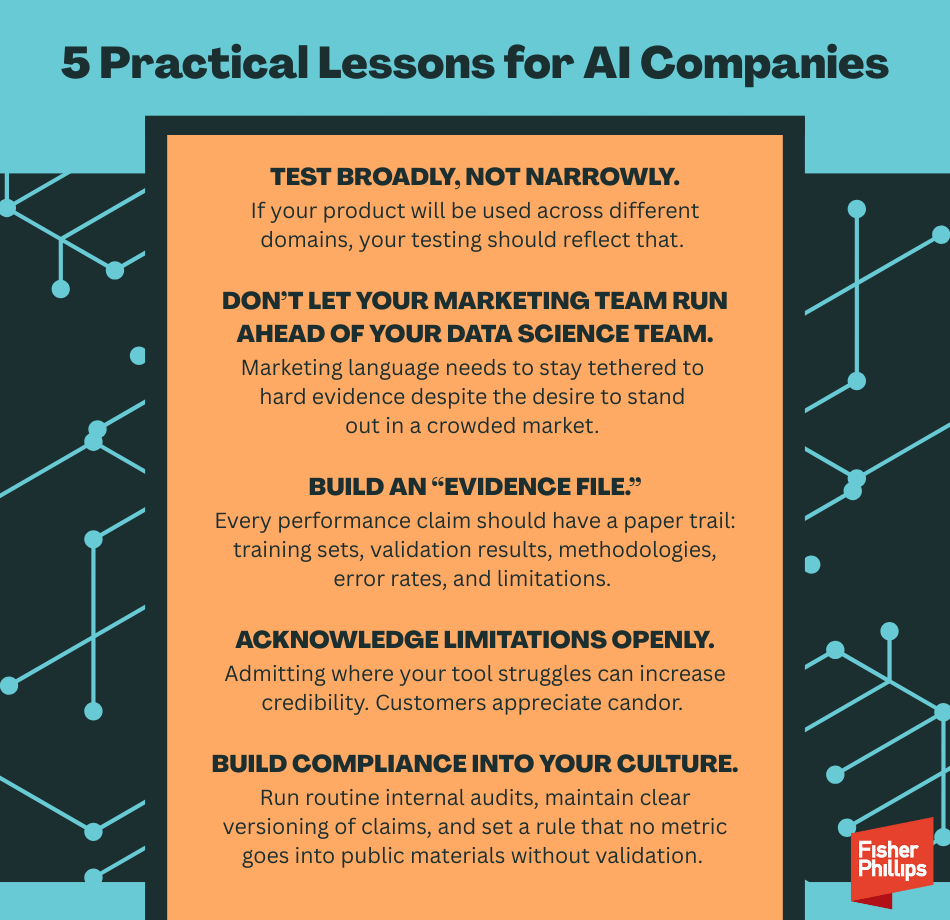

1. Test broadly, not narrowly.

If your product will be used across different domains, your testing should reflect that. A model trained on academic writing may look great on essays, but if customers are using it on social media content or business reports, the results can collapse. Don’t assume “works here” equals “works everywhere.”
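One way to put this lesson into practice is to report accuracy separately for each type of content rather than as a single blended number. The following is only a minimal sketch: the function names, the `toy_detector` stand-in, and the sample data are all hypothetical and are not drawn from the FTC order or from any real product.

```python
from collections import defaultdict


def accuracy_by_domain(samples, predict):
    """Return accuracy per content domain.

    samples: iterable of (domain, text, is_ai_generated) tuples.
    predict: callable that takes text and returns True if it judges
             the text to be AI-generated.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for domain, text, label in samples:
        total[domain] += 1
        if predict(text) == label:
            correct[domain] += 1
    return {domain: correct[domain] / total[domain] for domain in total}


def unsupported_domains(per_domain, claimed_accuracy):
    """List domains where measured accuracy falls below the advertised figure."""
    return sorted(d for d, acc in per_domain.items() if acc < claimed_accuracy)


# Hypothetical stand-in for a real model: flags text containing "furthermore".
def toy_detector(text):
    return "furthermore" in text.lower()


# Illustrative, made-up samples: (domain, text, is_ai_generated).
samples = [
    ("academic", "Furthermore, the results show a clear trend.", True),
    ("academic", "We argue that the premise is flawed.", False),
    ("marketing", "Furthermore, buy now and save!", False),
    ("marketing", "Limited offer this weekend only.", False),
]

per_domain = accuracy_by_domain(samples, toy_detector)
print(per_domain)
print(unsupported_domains(per_domain, claimed_accuracy=0.98))
```

A breakdown like this makes it harder for strong results in one domain to mask weak results in another before a headline number reaches marketing copy.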

2. Don’t let your marketing team run ahead of your data science team.

Ambitious claims often come from the desire to stand out in a crowded market. But marketing language needs to stay tethered to hard evidence. A practical step: set up a cross-functional review where technical staff vet marketing copy for accuracy before it goes public.

3. Build an “evidence file.”

Every performance claim should have a paper trail: training sets, validation results, methodologies, error rates, and limitations. If challenged by customers, competitors, or regulators, you’ll want that file at your fingertips as your insurance policy.
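An evidence file does not need to be elaborate. As a rough illustration, each public claim could be stored as a structured record alongside the test results that support it. The `ClaimEvidence` fields and the example values below are assumptions made for illustration only; they are not requirements taken from the consent order.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class ClaimEvidence:
    """One record per public performance claim (hypothetical schema)."""
    claim_text: str        # the wording used in public materials
    metric: str            # e.g. "accuracy"
    value: float           # measured result backing the claim
    test_domains: list     # content types covered by the test set
    methodology: str       # how the measurement was made
    limitations: list = field(default_factory=list)
    recorded_on: str = ""  # ISO date the evidence was recorded


def to_json(record):
    """Serialize a record so it can be archived with the test data."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


# Illustrative placeholder values, not real test results.
example = ClaimEvidence(
    claim_text="Performs best on structured text such as contracts and policies",
    metric="accuracy",
    value=0.91,
    test_domains=["contracts", "policies"],
    methodology="Held-out labeled test set, evaluated before publication",
    limitations=["May be less accurate on informal writing"],
    recorded_on="2025-09-11",
)

print(to_json(example))
```

Keeping the claim wording, the number, and the limitations in one record makes it easier to show what supported a statement at the time it was made.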

4. Acknowledge limitations openly.

Some founders fear that careful wording in describing AI products will dull the “wow factor.” Paradoxically, however, admitting where your tool struggles can increase credibility. Customers appreciate candor. A statement like “Our model performs best on structured text such as contracts and policies, but may be less accurate on informal writing” is better than vague promises of universal accuracy.

5. Build compliance into your culture.

You don’t need an in-house regulatory team to start. Small practices go a long way: routine internal audits, clear versioning of claims, and setting a rule that no metric goes into public materials without validation.
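The “no metric without validation” rule can be partly automated. The sketch below assumes a hypothetical internal list of validated figures and simply flags any percentage in draft copy that is not on it; the function name and sample text are illustrative, and a check like this supplements human review rather than replacing it.

```python
import re

# Matches figures such as "98%" or "99.5%".
PERCENT_PATTERN = re.compile(r"\d+(?:\.\d+)?%")


def find_unvalidated_metrics(copy_text, validated):
    """Return percentage figures in copy_text that are not in the validated set."""
    found = PERCENT_PATTERN.findall(copy_text)
    return [figure for figure in found if figure not in validated]


# Hypothetical draft copy and validated-claims list.
draft = "Detects AI text with 98% accuracy and cuts review time by 40%."
validated_figures = {"40%"}

flagged = find_unvalidated_metrics(draft, validated_figures)
print(flagged)
```

Any flagged figure would then go back to the technical team for supporting evidence before the copy is published.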

Conclusion

We will continue to monitor AI-related developments and provide the most up-to-date information directly to your inbox, so make sure you are subscribed to Fisher Phillips’ Insight System. If you have questions, contact your Fisher Phillips attorney, the authors of this Insight, or any attorney in our AI, Data, and Analytics Practice Group.

Related People

- Michael R. Greco, Regional Managing Partner

- Karen L. Odash, Associate